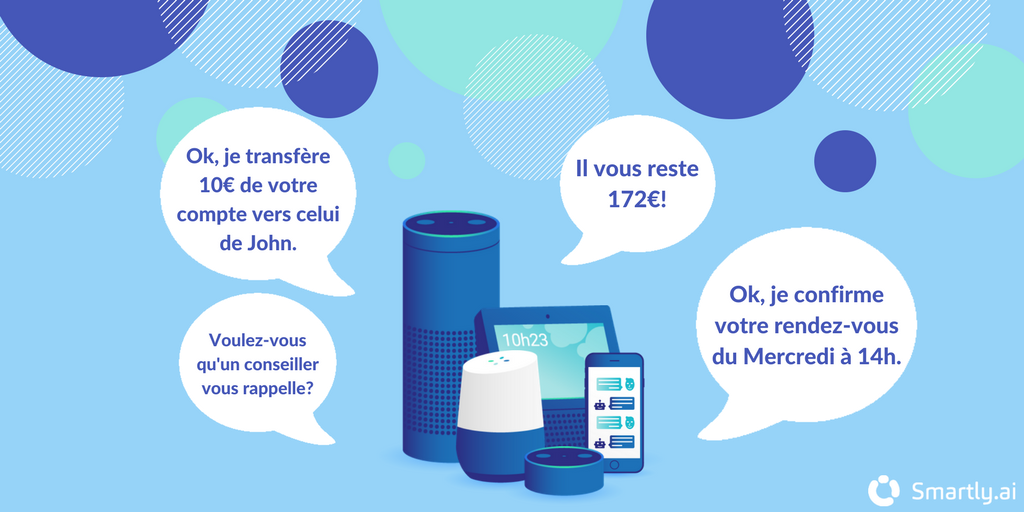

Smartly.ai is customer relationship automation software.

Our chatbots take load off your teams, reduce response times, and improve customer satisfaction.

© 2012-2024 – Vocal Apps SA