June 7

🔊 Notifications on voice assistants: great or annoying?

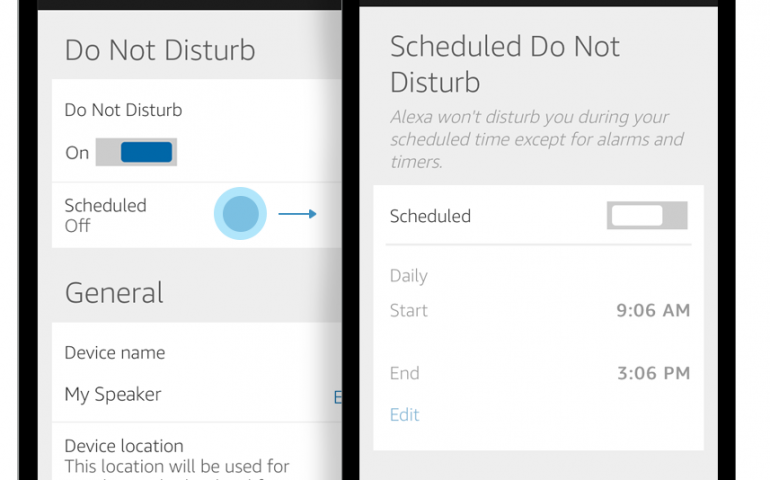

Amazon and Google have both recently announced that their respective connected devices will be able to deliver proactive notifications. Instead of simply reacting to your requests, they will now be able to activate when they have something to say.